|

|

|

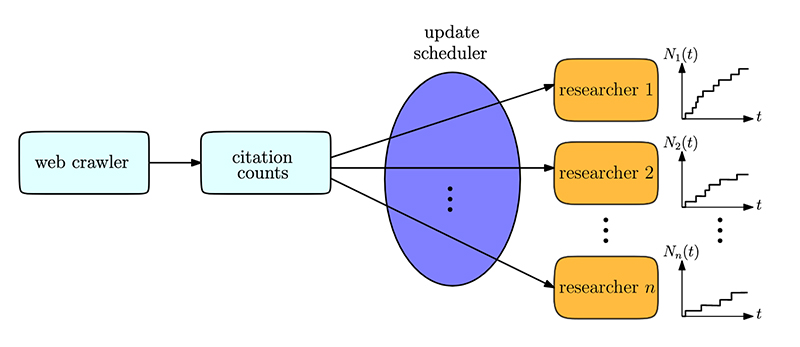

Web crawler finds and indexes scientific documents, from which citation counts are extracted upon examining their contents. Scheduler schedules updating citation counts of individual researchers based on their mean citations, and optionally, importance factors, subject to a total update rate. (Fig. 1 from the paper) |

|

Google Scholar, a citation index, crawls the web to find and index various items such as documents, images, videos, etc. As it focuses on scientific documents, Google Scholar further examines the contents of these documents to extract out citation counts for indexed papers. Google Scholar then updates citation counts of multitudes of individual researchers.

Google Scholar is, of course, resource-constrained; it cannot update all researchers all the time. If Google can update only a fraction of all researchers, how should it prioritize the updating process? Should it update researchers with higher mean citation rates more often, as their citation counts are subject to larger change per unit time? Or should it update researchers with lower mean citation rates more often to capture rarer, more informative changes?

In a new paper, Who Should Google Scholar Update More Often?, Professor Sennur Ulukus (ECE/ISR) and her graduate student Melih Bastopcu, model the citation count of each individual researcher as a counting process with a fixed mean. They use a metric similar to the age of information: the long-term average difference between the actual citation numbers and the citation numbers according to the latest updates. Ulukus and Bastopcu show that, to minimize this difference metric, the updater should allocate its total update capacity to researchers proportional to the square roots of their mean citation rates.

That is, more prolific researchers should be updated more often, but there are diminishing returns due to the concavity of the square root function. In a more general sense, the paper addresses the problem of optimal operation of a resource-constrained sampler that wishes to track multiple independent counting processes in a way that is as up to date as possible.

Related Articles:

Five Clark School authors part of new 'Age of Information' book

Alum Ahmed Arafa wins NSF CAREER Award

Bastopcu and Ulukus build model for real-time timely tracking of COVID-19 infection and recovery

Buyukates and Bastopcu win Best Student Paper Award at IEEE SPAWC 2021

Researchers balance information quality and freshness in information update system design

Ephremides leads new NSF Age of Information project

Barg honored with 2024 IEEE Richard W. Hamming Medal

Barg is PI for new quantum LDPC codes NSF grant

Narayan receives NSF funding for shared information work

Forthcoming information-theoretic cryptography book co-written by alum Tyagi and former visitor Watanabe

February 24, 2020

|